Speeding up VSCode (extensions) in 2022

I was curious whether VSCode could catch up with the native speed of editors such as Sublime. That led me to look for bottlenecks and find out where time is being spent. In this post I look at both the internals and extensions.

VSCode has a broad range of extensions, from knowledge management to image editors, but what does the growing ecosystem mean for raw performance?

It can often be quick and easy to point at the underlying stack (in this case, Electron) and say that’s where the problems lie, but I’ve found that isn’t always the case. This post takes a deep dive into the internals, shows areas that can be improved, and talks through some changes we may see this year. It should be of interest to anybody who is planning to work on an extension or has a general interest in the performance of VSCode.

A note on architecture

The crux of the design is that extensions run in a separate process from the UI. This way, they’re freer to do their own thing without competing with the core runtime.

They are written in JS and share the same event loop, which is advantageous because:

- Extensions don’t need to run all the time, so having a dedicated thread per-extension would be overkill and memory intensive.

- They can yield back control when performing I/O (like reading a file or fetching from the network).

- Sharing memory/configurations between them is a lot easier.

However, it’s still possible for one extension to block another. Extensions load in the “renderer” process, so if Ext 1 holds the event loop (stuck in a loop or performing a long-running action), the subsequent extensions will also be slow to start. Below is a rough layout.

So, the main lifecycle of each extension is: parse the code (starting from the package.json file), instantiate it, and call activate. This can sometimes take around 300ms.
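To make the blocking problem concrete, here is a toy model (not VSCode’s real loader) in which every extension’s activation runs on one shared thread, one after another. `ToyExtension`, `blockFor` and `activateAll` are hypothetical names for illustration; the busy-wait stands in for an extension that never yields the event loop.

```typescript
// Toy model: all extensions share one thread, and each activate() runs to
// completion before the next one can start. Not VSCode's real loader.
interface ToyExtension {
  id: string;
  activate: () => void;
}

// Busy-wait: CPU-bound work that never yields the event loop.
function blockFor(ms: number): void {
  const end = Date.now() + ms;
  while (Date.now() < end) {
    // spinning; nothing else on this thread can run meanwhile
  }
}

// Activate extensions in order, recording when each one got to start
// (in ms since the host began).
function activateAll(extensions: ToyExtension[]): Map<string, number> {
  const hostStart = Date.now();
  const startedAt = new Map<string, number>();
  for (const ext of extensions) {
    startedAt.set(ext.id, Date.now() - hostStart);
    ext.activate();
  }
  return startedAt;
}

const startTimes = activateAll([
  { id: "ext1", activate: () => blockFor(300) }, // holds the thread for ~300ms
  { id: "ext2", activate: () => {} },
  { id: "ext3", activate: () => {} },
]);

// ext2 and ext3 only get to start once ext1 has finished (roughly 300ms in).
console.log(startTimes);
```

Nothing ext2 or ext3 does can speed this up; the only fix is for ext1 to do less work at activation, or to yield.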

RAIL, the user-centric performance model for web apps, treats delays of 100ms or more as noticeable (i.e. you know something is happening), and delays of 1000ms or more as the point where “users lose focus on the task they are performing”. RAIL is a guide for the web, but the same applies to apps such as VSCode. Ten extensions each taking around 300ms not only add up to 3s of startup time, but each 300ms activation already falls into the realm of noticeable delay. Most extensions don’t need to spend that long starting up, and these issues can be avoided.
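The arithmetic behind that claim, as a small sketch: the buckets use the 100ms and 1000ms thresholds quoted above, and the labels are my own shorthand rather than RAIL’s wording. It also assumes eager extensions activate one after another, so their times simply add up.

```typescript
// Rough buckets based on the RAIL thresholds quoted above (100ms and 1000ms).
// The labels are shorthand, not RAIL's own wording.
type Perception = "instant" | "noticeable" | "focus lost";

function perceive(ms: number): Perception {
  if (ms < 100) return "instant";
  if (ms < 1000) return "noticeable";
  return "focus lost";
}

// Assumes eager extensions activate one after another, so their times add up.
function totalStartup(activationTimesMs: number[]): number {
  return activationTimesMs.reduce((sum, ms) => sum + ms, 0);
}

const tenExtensions = Array.from({ length: 10 }, () => 300);
const total = totalStartup(tenExtensions);

console.log(perceive(300)); // noticeable
console.log(total, perceive(total)); // 3000 focus lost
```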

Let’s look at a practical example.

Case Study: Postfix TS

PR: https://github.com/ipatalas/vscode-postfix-ts/pull/52

Postfix TS is an extension that lets you add completions to the end of existing expressions, so typing data.log turns it into console.log(data). It’s fairly straightforward as far as extensions go, so I was intrigued as to why it had such beefy startup times.

First, I started with the “Developer: Startup Performance” command.

This shows you where time is being spent across the application. There is a section near the top just for extensions.

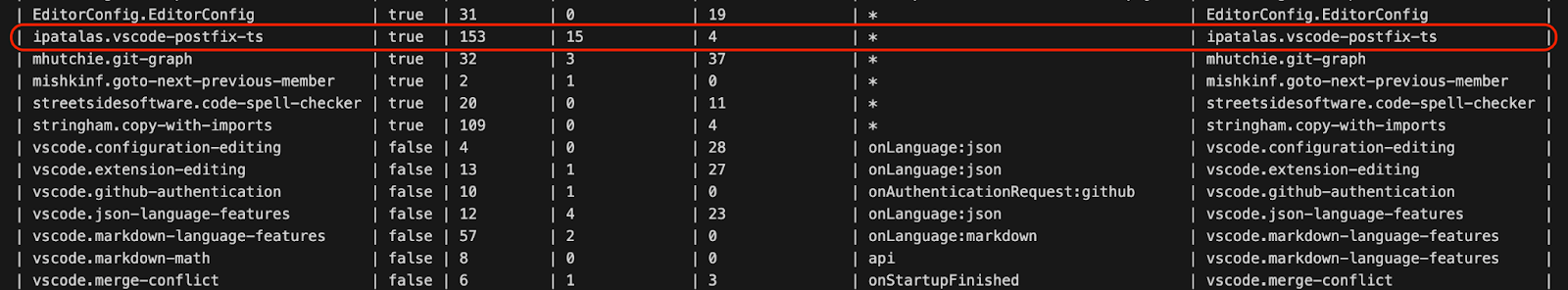

Let’s focus on 3 columns:

- Load Code (Column 3): How long is spent parsing and executing the source code supplied by the extension (in ms). CPU-intensive script parsing and execution can delay not only other extensions but also user interaction (not to mention cause battery drain on a laptop or mobile device). In the image above, this column shows 153ms.

- Call Activate (Column 4): How long the extension takes to “activate”. In the image above, this column shows 15ms.

- Event (Column 6): What triggered the extension to activate? In the image above, this column shows `*`.

Event

Let’s start with Event. `*` is not ideal: it means the extension starts immediately, competing with other extensions and VSCode itself during startup. This often isn’t needed, as most extensions don’t do anything until called upon, or just run in the background. An exception to the rule is anything that changes the UI. A flash of unstyled-to-styled content can be jarring on the web, and this UI is no different: things like a sudden change in syntax colouring can be frustrating.

Extensions that offer CodeLens (like GitLens) are fine to be delayed, as they are more of an enhancement to the current view. Plus, they’re not really useful until there’s some interaction with the editor (such as selecting a line).

If we imagine the loading of extensions as a queue, as in the layout at the top, it makes sense to put visual changes towards the front and features that require interaction near the back. Developing for the web works the same way: fonts and CSS are loaded as early as possible, whereas some JS uses the defer attribute.

VSCode offers a comprehensive range of activation events for extensions to use, but if you really need a startup hook, consider using onStartupFinished. This kicks off your extension after VSCode has loaded and gives other extensions time to start up. Coming back to Postfix TS, it’s only useful in TypeScript/JavaScript files, so there’s no point loading it at any other time. So, let’s change the activation events (the activationEvents field in package.json) to:

```json
["onLanguage:javascript", "onLanguage:typescript", "onLanguage:javascriptreact", "onLanguage:typescriptreact"]
```

This allows VSCode to ignore the extension if I’m not using those languages and will save me a whole chunk of startup time.
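To show how activation events gate loading, here is a simplified, hypothetical model of the check. `shouldActivate` and `EditorState` are made-up names, and VSCode’s real implementation handles many more events than the three covered here.

```typescript
// Simplified, hypothetical model of activation-event gating; VSCode's real
// logic supports many more events than the three handled here.
interface EditorState {
  startupFinished: boolean;
  openLanguageIds: Set<string>; // language IDs of documents that have been opened
}

function shouldActivate(activationEvents: string[], state: EditorState): boolean {
  return activationEvents.some((event) => {
    if (event === "*") return true; // eager: activate as soon as possible
    if (event === "onStartupFinished") return state.startupFinished;
    if (event.startsWith("onLanguage:")) {
      return state.openLanguageIds.has(event.slice("onLanguage:".length));
    }
    return false; // any other event is ignored in this sketch
  });
}

const postfixEvents = [
  "onLanguage:javascript",
  "onLanguage:typescript",
  "onLanguage:javascriptreact",
  "onLanguage:typescriptreact",
];

// Only Markdown and Rust files open: Postfix TS is never loaded.
console.log(shouldActivate(postfixEvents, { startupFinished: true, openLanguageIds: new Set(["markdown", "rust"]) })); // false
// A TypeScript file is opened: now it activates.
console.log(shouldActivate(postfixEvents, { startupFinished: true, openLanguageIds: new Set(["typescript"]) })); // true
```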

Load Code

In the image above, we see 153ms spent on load code. This number is relative, but a value much higher than the others tends to mean no bundling is happening. That’s a problem because there’s a cost to opening and closing files: loading 600 small files is much slower than loading one large file, and once load code goes over 100ms it starts to become noticeable. When I unpacked the extension I saw entire projects in there, such as TypeScript (that thing is 50MB!). The node_modules folder was over 60MB and had 1,373 files.
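If you want to check your own extension for the same problem, a small script along these lines counts the files and bytes under a directory, such as the node_modules folder of an unpacked extension. `measureDir` is a hypothetical helper; symlinks are skipped for simplicity, and the demo runs on a throwaway directory so it is self-contained.

```typescript
import * as fs from "node:fs";
import * as os from "node:os";
import * as path from "node:path";

// Recursively count regular files and their total size under `dir`.
// Symlinks and other special entries are skipped for simplicity.
function measureDir(dir: string): { files: number; bytes: number } {
  let files = 0;
  let bytes = 0;
  for (const entry of fs.readdirSync(dir, { withFileTypes: true })) {
    const full = path.join(dir, entry.name);
    if (entry.isDirectory()) {
      const sub = measureDir(full);
      files += sub.files;
      bytes += sub.bytes;
    } else if (entry.isFile()) {
      files += 1;
      bytes += fs.statSync(full).size;
    }
  }
  return { files, bytes };
}

// Demo on a throwaway directory; for a real check, point measureDir at an
// unpacked extension's node_modules folder instead.
const demo = fs.mkdtempSync(path.join(os.tmpdir(), "measure-"));
fs.mkdirSync(path.join(demo, "pkg"));
fs.writeFileSync(path.join(demo, "index.js"), "module.exports = 1;\n");
fs.writeFileSync(path.join(demo, "pkg", "a.js"), "exports.a = 2;\n");
const result = measureDir(demo);
fs.rmSync(demo, { recursive: true, force: true });

console.log(result); // { files: 2, bytes: 35 }
```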

If you’re putting together an extension, there’s nothing wrong with using the tsc CLI. It comes with TypeScript and works without needing other packages. But once you’re ready to distribute your extension (even for testing), you should switch to a bundler. I’ve found esbuild the easiest one to get up and running.

I set up an esbuild workflow; you can see it here. Now only a single file (extension.js) is generated and published.
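For reference, a sketch of the kind of esbuild options such a workflow might use. These are standard esbuild build options, but the entry and output paths are placeholders and this is not the exact script linked above. The function returns a plain object so it runs without esbuild installed; in a real build script you would pass it to `esbuild.build()`.

```typescript
// Typical esbuild options for bundling a VSCode extension into one file.
// The entry/output paths are placeholders; adjust them to your project.
function extensionBuildOptions(production: boolean) {
  return {
    entryPoints: ["src/extension.ts"],
    bundle: true, // pull dependencies from node_modules into the output file
    outfile: "dist/extension.js",
    external: ["vscode"], // supplied by the extension host at runtime, so never bundled
    format: "cjs" as const, // extensions are loaded as CommonJS today
    platform: "node" as const, // the extension host runs on Node.js
    target: "es2020",
    minify: production,
    sourcemap: !production, // source maps for local debugging only
  };
}

// In a real build script (with esbuild installed):
//   import * as esbuild from "esbuild";
//   await esbuild.build(extensionBuildOptions(true));
console.log(extensionBuildOptions(true));
```

Remember to point the main field in package.json at the bundled output (here, ./dist/extension.js).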

Reduce code-gen

I see far too many extensions setting ES6 (ES2015) as a compile target; there is no need for this. ES6 is almost seven years old, and almost no one is using a version of VSCode that old.

Updating the compile target means less generated code. Since newer syntax doesn’t need to be downlevelled, it also means faster build times, as there’s less work for the code transformer to do.

If you’re unsure which to choose, ES2020 is a good target, as it covers the last few VSCode versions, back to April 2021. Be sure to set your minimum supported VSCode version (the engines.vscode field in package.json) higher than v1.56. Anyone on an older version will continue to use the previous version of your extension.
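To see what “less code generated” means in practice, compare a function using optional chaining and nullish coalescing (both ES2020 syntax) with a hand-written approximation of what has to be emitted for an ES2015 target. The `Config` shape and the default of 14 are made up for illustration, and tsc’s real output differs in detail (it uses temporary variables).

```typescript
// Hypothetical settings shape, just for the example.
interface Config {
  editor?: { fontSize?: number };
}

// With an ES2020 (or later) target, this can be emitted essentially as written:
// optional chaining (?.) and nullish coalescing (??) are native ES2020 syntax.
function fontSizeModern(config: Config): number {
  return config.editor?.fontSize ?? 14;
}

// Roughly what has to be generated instead for an ES2015 target.
// (Hand-written approximation; tsc's real output uses temporaries and differs in detail.)
function fontSizeDownlevelled(config: Config): number {
  const editor = config.editor;
  const size = editor === null || editor === undefined ? undefined : editor.fontSize;
  return size !== null && size !== undefined ? size : 14;
}

console.log(fontSizeModern({}), fontSizeDownlevelled({})); // 14 14
console.log(fontSizeModern({ editor: { fontSize: 0 } })); // 0 (?? only falls back on null/undefined)
```

Multiply that expansion across every `?.` and `??` in a codebase and the difference in output size and transform work adds up.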

Results

Before:

58.4 MB (61,314,657 bytes), 201ms startup time

After:

3.43 MB (3,607,464 bytes), 32ms startup time
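For a sense of scale, the before/after numbers above work out as follows (plain arithmetic on the measured values; nothing new is measured here):

```typescript
// Percentage reduction between two measurements.
function reductionPercent(before: number, after: number): number {
  return ((before - after) / before) * 100;
}

const sizeSaved = reductionPercent(61_314_657, 3_607_464); // bytes on disk
const timeSaved = reductionPercent(201, 32); // startup time in ms

console.log(sizeSaved.toFixed(1), timeSaved.toFixed(1)); // 94.1 84.1
```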

We’ve shaved off quite a bit of space and time, but remember, this is just one extension; there were many others in the same shape. I did the same thing with the Open In Github extension.

Between the two extensions, that’s just under half a second saved. There are more opportunities for improvement to dig into, some of which aren’t available today but are worth highlighting.

ESModules

We saw above that using a bundler really helps bring down both size and load times. Part of this is due to tree-shaking out unused code. However, the tree-shaking process sometimes keeps code that isn’t needed, because it can’t confidently tell whether it’s safe to leave it out.

Today all extensions are exported as CommonJS, which, due to its dynamic nature, is difficult to optimize when bundling. ESModules are more statically analyzable in comparison, because their import/export syntax is standardized and import paths must be strings. This, coupled with better loading performance (thanks to their asynchronous nature), should improve overall load/run times. If you’re using a bundler, it should be a simple case of changing your output from CJS to ESM (don’t do this today though, as it won’t work yet).
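A deliberately simplified illustration of the static-analysis point: the scanner below uses regexes rather than a real parser, and `scanImports` is a hypothetical helper. An ESM import must name its path as a string literal, so a tool can find it without running anything, whereas `require()` accepts any expression, which may only be known at runtime.

```typescript
interface ImportScan {
  staticSpecifiers: string[]; // paths a tool can resolve without running the code
  unresolvableRequires: number; // require(expr) calls whose argument is not a string literal
}

function scanImports(source: string): ImportScan {
  const staticSpecifiers: string[] = [];
  // ESM: the path after `from` is always a string literal.
  for (const m of source.matchAll(/\bimport\s[^;]*?\bfrom\s*["']([^"']+)["']/g)) {
    staticSpecifiers.push(m[1]);
  }
  let unresolvableRequires = 0;
  // CommonJS: require() takes an arbitrary expression.
  for (const m of source.matchAll(/\brequire\(\s*([^)]*?)\s*\)/g)) {
    const arg = m[1];
    if (/^["'][^"']*["']$/.test(arg)) {
      staticSpecifiers.push(arg.slice(1, -1)); // a literal require is still resolvable
    } else {
      unresolvableRequires += 1; // only known once the code actually runs
    }
  }
  return { staticSpecifiers, unresolvableRequires };
}

const sample = `
import { format } from "./format";
const lint = require("./lint");
const plugin = require(pluginDir + "/index");
`;

console.log(scanImports(sample));
```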

When will we see a transition? It seems VSCode may be waiting on TypeScript for full ESModules support. You can follow the issue here (feel free to vote on it). The TypeScript team looks to be aiming for a release once they resolve their remaining concerns. I hope to see both of these happening in 2022.

Tree-sitter

Slow loading of large files can be due to syntax analysis.

Today tokenization (for syntax highlighting) runs on the main thread. If too much time is spent there, things quickly freeze up, so to avoid that the syntax highlighting process periodically yields back control until it’s finished. But why is it slow in the first place?

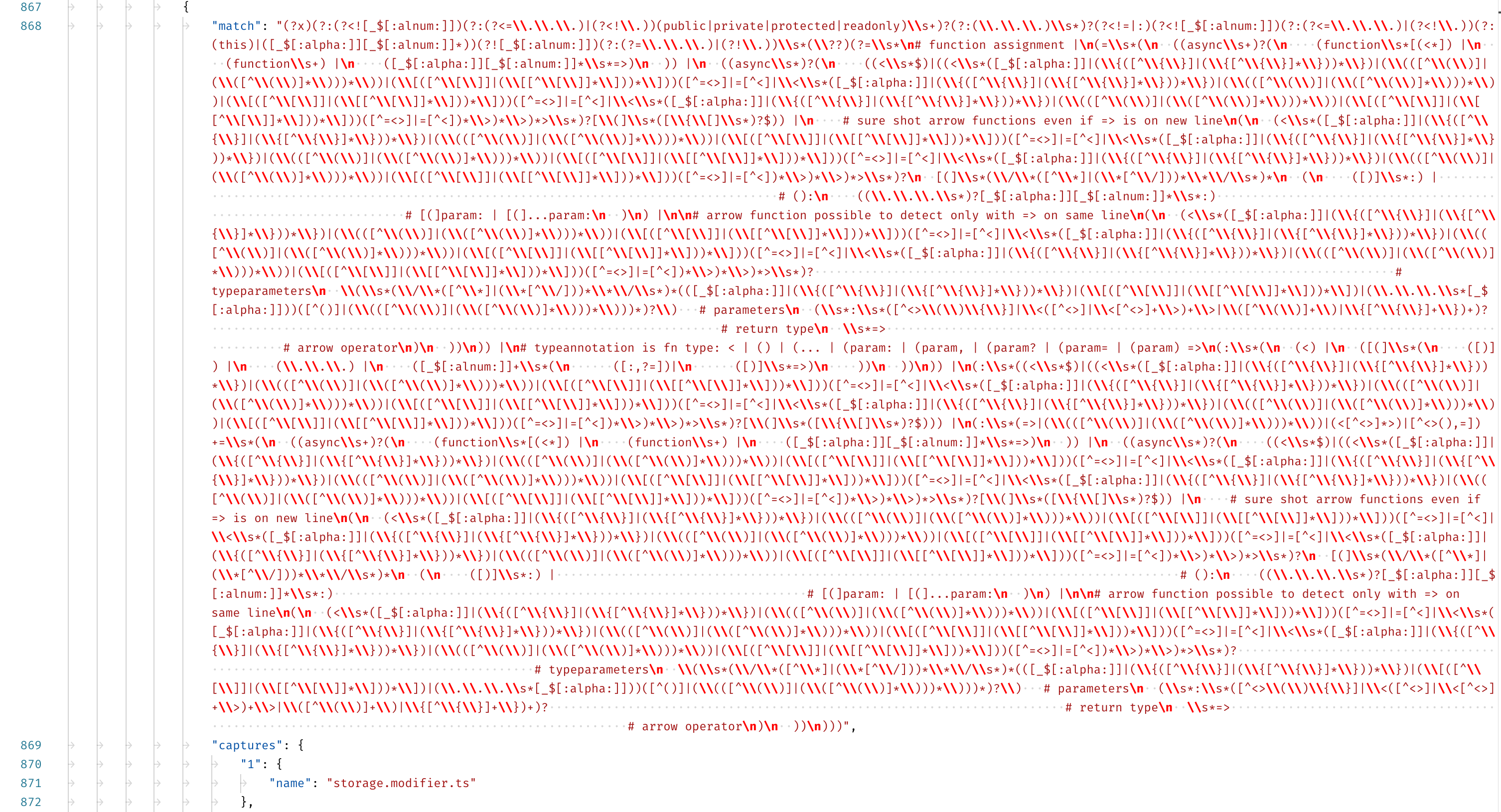
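A toy illustration of the two ideas in play here: regex-driven tokenization (in the spirit of TextMate grammars, but with a handful of made-up rules) and time-slicing, where the work is split into chunks so control goes back to the event loop between them. This is not VSCode’s actual tokenizer; `rules`, `tokenizeInChunks` and `runTimeSliced` are hypothetical.

```typescript
interface Token {
  line: number;
  text: string;
  scope: string;
}

// Made-up rules, tried in order; real grammars have far more (and far larger) patterns.
const rules: { scope: string; re: RegExp }[] = [
  { scope: "keyword", re: /^(?:const|let|function|return)\b/ },
  { scope: "number", re: /^\d+/ },
  { scope: "identifier", re: /^[A-Za-z_$][\w$]*/ },
  { scope: "whitespace", re: /^\s+/ },
  { scope: "punctuation", re: /^./ }, // fallback: any single character
];

// Tokenize one line by repeatedly taking the first rule that matches at the start.
function tokenizeLine(text: string, line: number): Token[] {
  const tokens: Token[] = [];
  let rest = text;
  while (rest.length > 0) {
    for (const { scope, re } of rules) {
      const m = re.exec(rest);
      if (m !== null) {
        if (scope !== "whitespace") tokens.push({ line, text: m[0], scope });
        rest = rest.slice(m[0].length);
        break;
      }
    }
  }
  return tokens;
}

// Tokenize `chunkSize` lines at a time, yielding the number of lines done after each chunk.
function* tokenizeInChunks(lines: string[], chunkSize: number): Generator<number, Token[]> {
  if (chunkSize < 1) throw new RangeError("chunkSize must be at least 1");
  const all: Token[] = [];
  for (let i = 0; i < lines.length; i += chunkSize) {
    lines.slice(i, i + chunkSize).forEach((text, j) => all.push(...tokenizeLine(text, i + j)));
    yield Math.min(i + chunkSize, lines.length);
  }
  return all;
}

// Drive the generator, handing control back to the event loop between chunks.
function runTimeSliced(lines: string[], chunkSize: number, done: (tokens: Token[]) => void): void {
  const it = tokenizeInChunks(lines, chunkSize);
  const step = (): void => {
    const r = it.next();
    if (r.done) {
      done(r.value);
    } else {
      setTimeout(step, 0); // other queued work (input, rendering) can run before the next chunk
    }
  };
  step();
}

runTimeSliced(["const x = 42", "return x"], 1, (tokens) => console.log(tokens.length)); // 6
```

Yielding keeps the editor responsive, but it doesn’t make the total work any smaller: every line still runs through the regex rules.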

Syntax highlighting uses TextMate grammars, which are regex based and inefficient. These regular expressions can get pretty immense; the example below is from the TypeScript ruleset.

Despite the effort the team has put into speeding things up, the aging system built on these regex grammars is hitting its limits.

The fact that we now have these complex grammars that end up producing beautiful tokens is more of a testament to the amazing computing power available to us than to the design of the [TextMate] grammar semantics.

Alex Dima - Microsoft

Tree-sitter is a new concurrent, incremental parsing system created to solve this problem. The “incremental” bit is noteworthy because it’s designed to handle updates as the syntax changes; in fact, it’s fast enough to run on each keystroke. Max Brunsfeld goes into more detail in his talk about Tree-sitter here. GitHub migrated to Tree-sitter for syntax parsing and code navigation, Neovim added experimental support in 2021, and former Atom team members will be moving forward with Zed, a Rust-based text editor that will use Tree-sitter from the outset.
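Tree-sitter’s incremental parsing works on a real syntax tree and reuses unchanged subtrees, which is far more sophisticated than anything that fits here. As a much cruder stand-in that only shows why incremental updates are cheap, here is a per-line cache that re-tokenizes just the lines whose text changed. `IncrementalHighlighter` is a hypothetical class, and this is explicitly not Tree-sitter’s algorithm.

```typescript
type Tokenizer = (line: string) => string[];

class IncrementalHighlighter {
  private cache: { text: string; tokens: string[] }[] = [];
  private readonly tokenize: Tokenizer;
  tokenizeCalls = 0; // how many lines have actually been (re)tokenized

  constructor(tokenize: Tokenizer) {
    this.tokenize = tokenize;
  }

  // Re-tokenize only the lines whose text differs from the previous pass.
  update(lines: string[]): string[][] {
    const next = lines.map((text, i) => {
      const cached = this.cache[i];
      if (cached !== undefined && cached.text === text) return cached; // unchanged: reuse
      this.tokenizeCalls += 1;
      return { text, tokens: this.tokenize(text) };
    });
    this.cache = next;
    return next.map((entry) => entry.tokens);
  }
}

// A trivial whitespace tokenizer stands in for a real grammar.
const hl = new IncrementalHighlighter((line) => line.split(/\s+/).filter(Boolean));
hl.update(["let a = 1", "let b = 2", "let c = 3"]); // first pass: all 3 lines tokenized
hl.update(["let a = 1", "let b = 20", "let c = 3"]); // only the edited line is redone
console.log(hl.tokenizeCalls); // 4
```

This naive cache falls apart as soon as a line is inserted (every later index shifts) or when state carries across lines, such as a multi-line comment; coping with those edits efficiently is what a tree-based incremental parser is built for.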

WebAssembly (WASM)

If you really need to do some CPU-intensive work, it’s now possible to offload some of the workload to a language server. This allows you to implement the bulk of your extension in another language (for instance, writing Rust code and compiling it down to WASM).

Thanks to wasm-pack, it’s easy to write a Rust extension or module and export it. In the following example I make a change to the Rust code for the server part of the extension, which triggers an update, and then I can see it being run in the right-hand Code window.

I’ve created a useful template to get started with here: https://github.com/jasonwilliams/hello-wasm

This is made possible not only by wasm-pack, but also by the great esbuild-wasm-pack-plugin that will watch both Rust and TypeScript/JavaScript code, then rebuild on a change.

As Gabe Jackson from OSO explained, bundling to WASM has its advantages. One is that you don’t need to provide a binary for every architecture.
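Whatever toolchain produced it, a .wasm file ends up being loaded through the standard WebAssembly JavaScript API. As a self-contained sketch, the bytes below are a tiny hand-assembled module exporting add(i32, i32) -> i32, standing in for what wasm-pack would build from Rust; in a real extension you would load the generated file (or use the glue code wasm-pack emits) instead.

```typescript
// A minimal hand-assembled WebAssembly module exporting add(i32, i32) -> i32.
const addWasm = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // magic "\0asm", version 1
  0x01, 0x07, 0x01, 0x60, 0x02, 0x7f, 0x7f, 0x01, 0x7f, // type section: (i32, i32) -> i32
  0x03, 0x02, 0x01, 0x00, // function section: one function of type 0
  0x07, 0x07, 0x01, 0x03, 0x61, 0x64, 0x64, 0x00, 0x00, // export section: "add" -> function 0
  0x0a, 0x09, 0x01, 0x07, 0x00, 0x20, 0x00, 0x20, 0x01, 0x6a, 0x0b, // code: local.get 0, local.get 1, i32.add, end
]);

// Compile and instantiate synchronously; fine for a module this small.
const wasmModule = new WebAssembly.Module(addWasm);
const instance = new WebAssembly.Instance(wasmModule);
const add = instance.exports.add as (a: number, b: number) => number;

console.log(add(2, 40)); // 42
```

Because the module is plain bytecode, the same bytes run on whatever OS and CPU the extension host runs on, which is the portability advantage mentioned above.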

Wrapping Up

So there are changes that can be made today and there are features to look forward to in the future. It will be an interesting year if some of these projects reach prime time. I also believe we’ll see more competition in this space, especially from Zed.

That being said, there are plenty of improvements that can be made in the extensions space today without needing to perform wholesale changes to the architecture.