Building a JS Interpreter in Rust Part 1

Environment Setup

Before we start looking at Rust code, lets go through our dev environment. I use Visual Studio Code with the Rust (RLS) plugin. This plugin is fantastic, and covers pretty much everything you need Rust wise, the Rust Language Server sets itself up mostly, but its worth checking out the Github page and following the steps.

If Sublime is more your thing, check out the Rust Enhanced plugin for Sublime Text 3. It has virtually the same functionality, and with a bit of extra setup you can get the Rust Language Server running too.

I also use Rustup, Rustup is great for managing your Rust version, switching to nightly/stable or adding some additional toolchains.

Finally, to follow along just clone https://github.com/jasonwilliams/boa

Lexical Scanning

When writing an interpreter or a compiler for any language, you usually need to start with a lexer and a parser. Boa here is no different, our first task will be to do the same but what do these do?

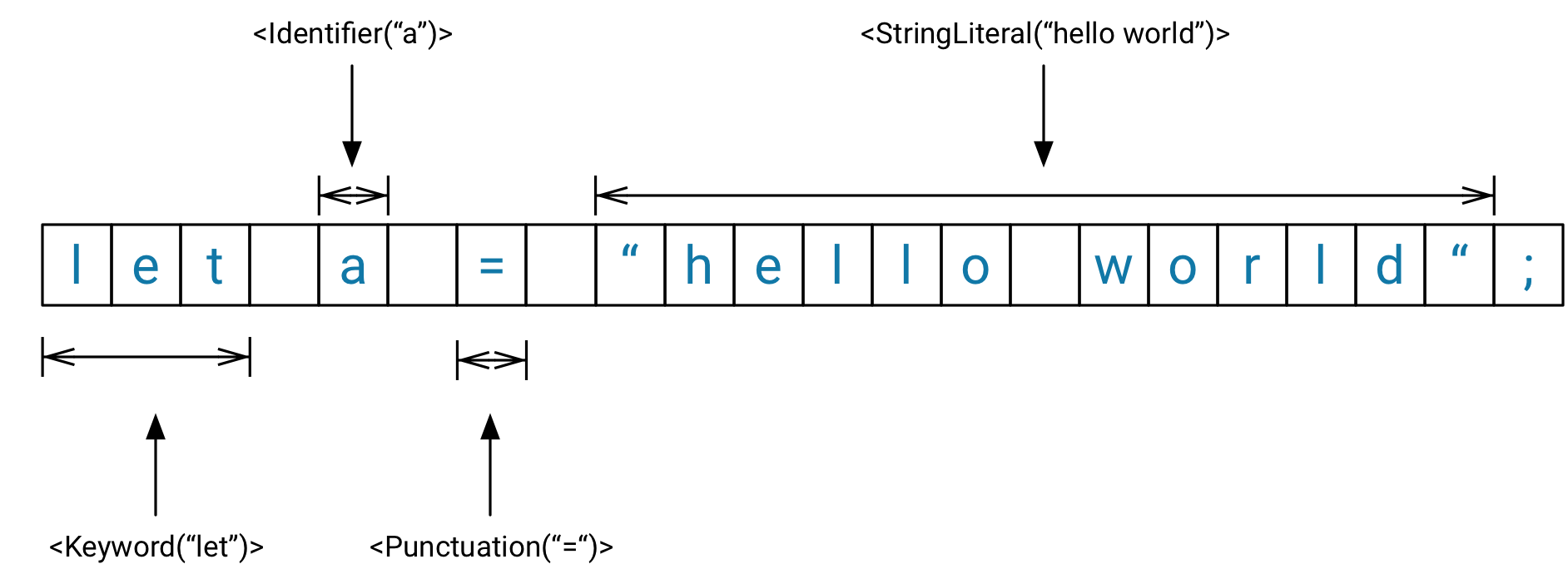

A lexer takes a stream of characters and breaks them up into tokens. Tokens are usually split by delimiters in the source code, such as whitespace, new lines or commas. There are plenty of libraries out there that can do this for you, even in Rust, and its recommended to use one, but as a learning exercise I’ve decided to write one from scratch.

Could we use regular expressions for doing this?

Regular expression engines are big, complicated, and usually overkill for this sort of operation. They can also be expensive if our only question is “What is the next character?”. Writing a loop is something that can optimise quite well and will be more lightweight than calling into the regex engine on every unit of text.

Rob Pike explains it best here

Reading the source code

let buffer = read_to_string("tests/js/test.js").unwrap();We use Rust’s fs::read_to_string method to get the contents of the JS file into a String buffer, it returns a Result type, so we need to unwrap that back into the String. unwrap will attempt to return the wrapped String value, but if it fails it will emit an error and panic. For production code this isn’t great practise, as we want to deal with our errors or at least give a better message. We can improve this by changing unwrap to expect.

let buffer = read_to_string("tests/js/test.js").expect("Unable to read file");Token Implementation

A token can be a string taken from the source code with some added metadata which gives it context. For example, instead of “function” a token would be {value: “function”, type: “keyword”, line: 2, column: 17}. As you can see a token is just like an object which has some added context around some text, they usually have a type, which would be “keyword”, “Boolean”, “Punctuation” etc, these types help the parser going forward.

We can use a struct to represent a token, if you’re coming from a JavaScript background you can think of a struct like an Object, or better yet a constructor, we can make instances of these. The pub is so that it can be publicly exported from the module its written in, similar to export in javascript. Properties are private by default, so we should make these public too. Here we’re declaring the data types this struct will hold, we can then populate them later.

pub struct Token {

pub data: TokenData,

pub pos: Position

}Below shows TokenData represented as an enum. These are really useful when you have a group or collection of things you want to store as types within a namespace. The different values within the enum are called variants and they are always namespaced under their identifier, so if you wanted to use EOF it would be TokenData::EOF, we can also store the data directly inside the enum variant. If you search through the codebase you can see these being used.

/// Represents the type of Token

pub enum TokenData {

/// A boolean literal, which is either `true` or `false`

BooleanLiteral(bool),

/// The end of the file

EOF,

/// An identifier

Identifier(String),

/// A keyword

Keyword(Keyword),

/// A `null` literal

NullLiteral,

/// A numeric literal

NumericLiteral(f64),

/// A piece of punctuation

Punctuator(Punctuator),

/// A string literal

StringLiteral(String),

/// A regular expression

RegularExpression(String),

/// A comment

Comment(String),

}Position is pretty easy, we just create a struct holding column number and line number, both are unsigned 64 bit integers.

pub struct Position {

// Column number

pub column_number: u64,

// Line number

pub line_number: u64,

}So we are working our way through source code, taking each unit of text at a time, generating a token, then adding it to a vector (a growable array on the heap). The bulk of this happens here within the lex function. lex starts a loop which contains a match expression (similar to a switch/case in JavaScript), we then compare every character we come across and do some action against it, each action returns a new token. We keep going until we have no more characters in our buffer left!

In the example below let is an interesting unit to tokenise because it could either be a identifier or a keyword. We know its not a string because there are no quotes around it, its not a number or some punctuation, nor is it a boolean. What we do here is check if it exists in our Keyword’s enum (by calling FromStr which keywords implements). If we get a Keyword value we know we’re sitting on top of a keyword, else we assume its an Identifier. For each token we process, we update the column and row values so they are always correct.

We have now generated a vector of tokens, we can pass these to the Parser to deal with. More on that next time.

Esprit

Quick shoutout to Esprit.

Going forward I may farm out the work of lexing/parsing to a separate library, Esprit was created by Dave Herman, who’s also on the TC39 committee. It is a lexing and parsing library for JS written in Rust, it works in a very similar fashion to what I explained above.